Top 12 Quotes from Bezos' 2016 Letter to Shareholders

At over 4000 words, Jeff Bezos' 2016 letter to Amazon shareholders (posted last week) has a lot to say. While I highly recommend that tech executives and investors read the entire thing, here are my top 12 excerpts from the letter:

1. Our growth has happened fast. Twenty years ago, I was driving boxes to the post office in my Chevy Blazer and dreaming of a forklift.

2. This year, Amazon became the fastest company ever to reach $100 billion in annual sales. Also this year, Amazon Web Services is reaching $10 billion in annual sales … doing so at a pace even faster than Amazon achieved that milestone.

3. AWS is bigger than Amazon.com was at 10 years old, growing at a faster rate, and – most noteworthy in my view – the pace of innovation continues to accelerate – we announced 722 significant new features and services in 2015, a 40% increase over 2014.

4. Prime Now … was launched only 111 days after it was dreamed up.

5. We also created the Amazon Lending program to help sellers grow. Since the program launched, we’ve provided aggregate funding of over $1.5 billion to micro, small and medium businesses across the U.S., U.K. and Japan.

6. To globalize Marketplace and expand the opportunities available to sellers, we built selling tools that empowered entrepreneurs in 172 countries to reach customers in 189 countries last year. These cross-border sales are now nearly a quarter of all third-party units sold on Amazon.

7. We took two big swings and missed – with Auctions and zShops – before we launched Marketplace over 15 years ago. We learned from our failures and stayed stubborn on the vision, and today close to 50% of units sold on Amazon are sold by third-party sellers.

8. We reached 25% sustainable energy use across AWS last year, are on track to reach 40% this year, and are working on goals that will cover all of Amazon’s facilities around the world, including our fulfillment centers.

9. I’m talking about customer obsession rather than competitor obsession, eagerness to invent and pioneer, willingness to fail, the patience to think long-term, and the taking of professional pride in operational excellence. Through that lens, AWS and Amazon retail are very similar indeed.

10. One area where I think we are especially distinctive is failure. I believe we are the best place in the world to fail (we have plenty of practice!), and failure and invention are inseparable twins.

11. We want Prime to be such a good value, you’d be irresponsible not to be a member.

12. As organizations get larger, there seems to be a tendency to use the heavy-weight Type 1 decision-making process on most decisions, including many Type 2 decisions. The end result of this is slowness, unthoughtful risk aversion, failure to experiment sufficiently, and consequently diminished invention.

Why cloud IT providers generate profits by locking you in, and what to do about it - Part 2 of 2

In the first article of this series, I spoke about how and why cloud IT providers use lock-in. I'll briefly revisit this and then focus on strategies to maintain buyer power by minimizing lock-in.

If you asked Kevin O'Leary of ABC's Shark Tank about customer lock-in with cloud providers, he might say something like:

"You make the most MONEY by minimizing the cost of customer acquisition (COCA) and maximizing total revenue per customer. Low COCA combined with lock-in lets them milk you like a cow."

In short, they want to make as much profit as possible. So what do you do about it?

1. Avoid proprietary resource formats where possible. For example, both AWS and VMware use proprietary virtual machine formats. Deploying applications on portable container technologies like Docker, orchestrated with tools like Google’s Kubernetes, means you’ll have a much easier time moving the next time Google drops prices by 20%.

2. Watch out for proprietary integration platforms like Boomi, IBM Cast Iron, and so on. The more work you do integrating your data and applications, the more you’re locked in to the integration platform. These are useful tools, but limit the scope each platform is used for and have a plan for how you might migrate off that platform in the future.

3. Use open source where it makes sense. Open source projects like Linux, OpenStack, Hadoop, Apache Mesos, Apache Spark, Riak and others provide real value that helps companies develop a digital platform for innovation. The catch is that talent for many open source technologies is scarce, and much of the tech is still immature. Companies like Cloudera can mitigate this, but they have their own form of lock-in to watch out for in the form of “enterprise distributions”.

4. Don’t standardize across the enterprise on proprietary platforms like MSFT Azure. Period. But don’t be afraid to use proprietary platforms for specific high-impact projects if you have significant in-house talent aligned to that platform, while building expertise around alternatives like Cloud Foundry.

5. Make sure your vendors and developers use a standards-based, service-oriented approach. Existing standards like JSON, WSDL and OpenStack APIs, and emerging standards like WADL, VMAN and CIMI, should be supported to the extent possible by any technology you choose to adopt.
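To make points 2 and 5 a bit more concrete, here's a minimal Python sketch of one common pattern: your own code depends on a small, provider-neutral interface, and each vendor's API sits behind an adapter, so switching providers means rewriting one adapter rather than every caller. The names `ObjectStore`, `InMemoryStore`, `save_order` and `load_order` are hypothetical, and the in-memory class only stands in for a real adapter that would wrap a specific provider's SDK.

```python
import json
from abc import ABC, abstractmethod


class ObjectStore(ABC):
    """Hypothetical provider-neutral storage interface that application code depends on."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Stand-in adapter; a real one would wrap one provider's SDK behind these same two methods."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def save_order(store: ObjectStore, order_id: str, order: dict) -> None:
    # Serialize to JSON, a vendor-neutral format, so the stored data stays portable too.
    store.put(f"orders/{order_id}.json", json.dumps(order).encode("utf-8"))


def load_order(store: ObjectStore, order_id: str) -> dict:
    # Callers never touch provider-specific calls, only the ObjectStore interface.
    return json.loads(store.get(f"orders/{order_id}.json").decode("utf-8"))
```

For example, `save_order(InMemoryStore(), "42", {"sku": "ABC", "qty": 3})` writes the order as JSON under `orders/42.json`. The interface is deliberately tiny; the bigger it grows, the more it starts to mirror one vendor's API, which defeats the purpose.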

Why cloud IT providers generate profits by locking you in, and what to do about it - Part 1 of 2

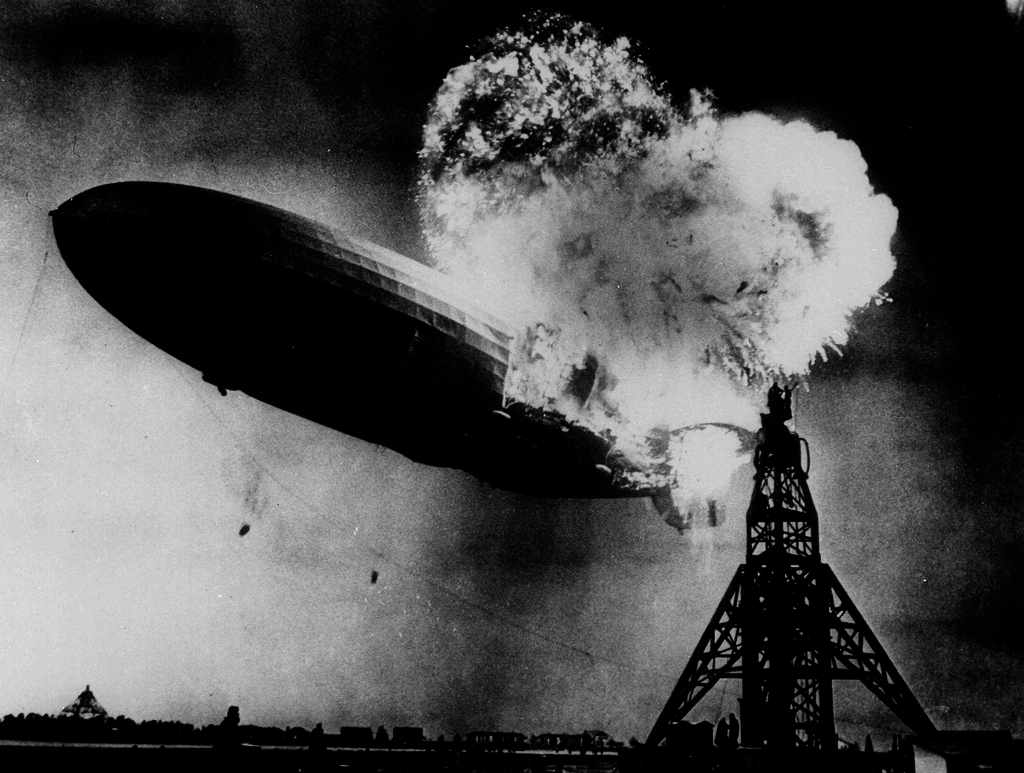

The comparison has been made before, but the Cloud IT sector today looks in many ways much like the automotive industry of the early 20th century.

During the first decade of the 20th century, more than 485 auto companies entered the market. After that explosive first decade, a massive wave of consolidation followed, eventually resulting in the dominance of just a few companies. This type of industry evolution (technological innovation at the outset, followed by a wave of commoditization, and finally consolidation) is common in markets with economies of scale. There will be differences, though.

Here are two predictions for differences in industry evolution between Cloud IT and 20th century autos:

- The Cloud IT sector will consolidate an order of magnitude faster (perhaps two) than the auto industry did

- Future Cloud IT oligopolists will work to maximize customer retention by maximizing switching costs (creating lock-in)

Cloud IT providers are subject to the same business rules as businesses in any other sector. One of those rules is that, if you want to make long-term sustainable profits (or “economic” profits for you MBA types), you must acquire customers and retain them. Technology-driven value add (sustainable competitive advantage) can help acquire customers, but as more and more IT becomes commoditized, customer switching costs start to drive the pricing required for cloud providers to sustain profits.

While auto manufacturers used economies of scale, styling and brand loyalty to generate economic profits, cloud IT providers face a steeper commoditization curve (driven in part by open source) and a lower (though still large) threshold for reaching economies of scale (the minimum point of the long-run average cost curve). What this means is that commoditization happens more quickly as providers reach scale more quickly. In this environment, a loss-leader plus high-switching-cost strategy makes a great deal of sense.
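A deliberately simplified way to see why switching costs matter so much to pricing: a rational customer moves only when the savings from a cheaper competitor over some planning horizon exceed the one-time cost of switching (rework, retraining, data transfer). The Python sketch below captures that assumption; the function names and figures are hypothetical, and it ignores discounting, risk and migration downtime.

```python
def max_sustainable_premium(switching_cost: float, horizon_months: int) -> float:
    """Largest monthly premium over a competitor's price a customer tolerates
    before switching pays off (simplified: no discounting, no risk)."""
    if horizon_months <= 0:
        raise ValueError("horizon_months must be positive")
    return switching_cost / horizon_months


def should_switch(monthly_premium: float, switching_cost: float, horizon_months: int) -> bool:
    """Switch only if cumulative savings over the horizon exceed the one-time switching cost."""
    return monthly_premium * horizon_months > switching_cost


# Hypothetical example: $600,000 to re-integrate, retrain and move data, 36-month horizon.
premium = max_sustainable_premium(600_000, 36)  # about $16,667 per month
```

Under these assumptions, doubling the switching cost doubles the premium a provider can charge before you walk away, which is why a loss leader up front plus high switching costs later is such an attractive combination for providers.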

If you think about it for a moment, the prior generation of IT leaders (e.g. HP, MSFT, IBM, CISCO, DELL, EMC, ORACLE, SAP) used a number of techniques to lock in their customers, so why wouldn’t the next generation of IT leaders do the same?

How cloud IT providers lock you in

One of the key needs of 21st century enterprises is to be able to serve customers better in the digital realm. To do that they need to make sense of and monetize their data, all their data. That data lives in lots of different systems and has to be integrated to be useful. Cloud services typically provide APIs (application programming interfaces) to make this possible.

Lock-in method one: Enter the API

In the age of devops where everything in IT is being automated, cloud services are no exception. By using cloud service APIs, different data sets and applications can be connected in useful ways. An example use case is to use customer purchase information and social media activity to create a better support experience, manage inventory, and optimize pricing in real time.

But the more you automate, the more it costs to switch providers.

Cloud IT providers’ APIs are generally not standard, and they’re often highly complex. For example, VMware had at least 5 distinct APIs for its vCenter application alone at one point. Lack of standards and complexity create additional rework, add to training and recruiting costs, and add significant time and risk to any transition to another provider. This is true for any cloud provider that has a product with an API.

In other words, complex, non-standard APIs drive profits for cloud providers.

Lock-in method two: Data Gravity

Data Gravity, a term coined by Dave McCrory (@mccrory), the CTO at Basho Technologies, describes the tendency for applications to migrate toward data as it grows. The idea is that as data grows, it becomes more difficult to move it to the applications that use it, for several reasons, among them network costs, the difficulty of accurately sharding the data, and so on.

Data is growing faster than bandwidth.

There are two exponential growth trends (similar to Moore’s law) at work with respect to data. First, driven by trends like social media and the Internet of Things (IoT), enterprise data is growing at an astronomical rate. Second, network bandwidth is also growing robustly, but much more slowly than data. The implication?

As time goes on, it gets harder to move your data from one provider to another. Amazon was likely thinking about this when they launched AWS Import/Export Snowball at re:Invent last fall.
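Here's a back-of-the-envelope Python sketch of why data gravity tightens over time. It computes how long a bulk transfer takes over a network link, then applies compound growth to both data volume and bandwidth. The 80% link efficiency and the growth rates are my own illustrative assumptions, not measured figures; plug in your own.

```python
def transfer_days(data_tb: float, bandwidth_gbps: float, efficiency: float = 0.8) -> float:
    """Days to move data_tb terabytes over a bandwidth_gbps link,
    assuming only `efficiency` of the nominal rate is achievable."""
    bits = data_tb * 1e12 * 8  # decimal terabytes -> bits
    seconds = bits / (bandwidth_gbps * 1e9 * efficiency)
    return seconds / 86_400


def transfer_days_after(years: int, data_tb: float, bandwidth_gbps: float,
                        data_growth: float, bandwidth_growth: float) -> float:
    """Transfer time after `years` of compound growth in both data volume and bandwidth."""
    future_data = data_tb * (1 + data_growth) ** years
    future_bw = bandwidth_gbps * (1 + bandwidth_growth) ** years
    return transfer_days(future_data, future_bw)
```

For example, moving 1 PB (1,000 TB) over a 10 Gbps link at 80% efficiency takes roughly 11.6 days. If data grew 40% a year while bandwidth grew 20% a year (both hypothetical rates), the same move would take about 25 days five years later, because transfer time scales with the ratio (1.4 / 1.2)^5, roughly 2.16.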

Like the proverbial drug dealer, the business model for many providers is to provide small scale services heavily discounted or free as part of the cost of customer acquisition. While cloud is easy to consume, as scale grows, cost grows not just in absolute terms but in percentage terms.

In my next post, I’ll delve into the best practices that help you avoid (or at least minimize) cloud lock-in.

If you found this post informative, please share it with your network and consider joining the discussion below.

For more insights, sign up for updates at www.contechadvisors.com or follow me on Twitter at @Tyler_J_J

The Virtualization and Cloud Efficiency Myth

At the beginning of the 20th century in the US, life was difficult for all but the upper class. While 80% of families in the US had a stay-at-home mother, the hours were grueling for both parents. Technology-driven innovation promised to change all that by making the life of a homemaker much more efficient and easier.

One can argue, though, that life didn’t become easier: while new technologies like refrigerators, vacuum cleaners, dishwashers and washing machines might make life better, people seem to be working just as hard as, if not harder than, in ages past. Technology increased the capacity for work, but instead of increasing leisure time, that excess capacity just shifted to other tasks. Case in point: the share of single-earner families dropped from 80% in 1900 to 24% in 1999.

Is this a good thing? Who knows, but if our lives aren’t better, it certainly isn’t the technology’s fault.

What does this have to do with virtualization and cloud?

Way back in 1998, when VMware was founded, virtualization presented a similar promise of ease and efficiency. By allowing administrators to partition underutilized physical servers into ‘virtual’ machines, it promised to increase utilization and free up capital. Unfortunately, for the most part that hasn’t happened. It’s a poorly kept secret that server utilization in enterprise datacenters is much lower than most people think, even as virtualization reaches saturation with about 75% of x86 servers now virtualized.

Conversation between Alex Benik of Battery Ventures and a Wall Street technologist:

Alex: Do you track server and CPU utilization?

Wall Street IT Guru: Yes

Alex: So it’s a metric you report on with other infrastructure KPIs?

Wall Street IT Guru: No way, we don’t put it in reports. If people knew how low it really is, we’d all get fired.

Cloud isn’t any better. A cloud services provider I recently worked with found that over 70% of the virtual machines its customers provisioned were simply turned on and left on permanently, with utilization under 20%. Google employees published a book with similar data. Not very ‘cloudy’, is it?
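Before anyone reaches for a technological fix, it helps to actually measure the problem the Wall Street technologist above didn't want to report. Below is a minimal Python sketch that flags VMs that are powered on nearly all the time yet average under 20% CPU, mirroring the pattern described above. The `VmUsage` record, its fields and the 95% "always on" cutoff are hypothetical; in practice the inputs would come from whatever monitoring or billing data you already collect.

```python
from dataclasses import dataclass


@dataclass
class VmUsage:
    name: str
    hours_on: float      # hours powered on during the observation window
    window_hours: float  # length of the observation window in hours
    avg_cpu_pct: float   # average CPU utilization while powered on


def always_on_low_util(vms: list[VmUsage], on_fraction: float = 0.95,
                       cpu_threshold: float = 20.0) -> list[str]:
    """Names of VMs that were (almost) always on yet averaged under cpu_threshold % CPU."""
    return [
        vm.name
        for vm in vms
        if vm.hours_on / vm.window_hours >= on_fraction and vm.avg_cpu_pct < cpu_threshold
    ]


def share_flagged(vms: list[VmUsage], **kwargs) -> float:
    """Fraction of the fleet flagged by always_on_low_util (0.0 for an empty fleet)."""
    return len(always_on_low_util(vms, **kwargs)) / len(vms) if vms else 0.0
```

Reporting `share_flagged` alongside budget as an infrastructure KPI is one way to make utilization visible, which is exactly the number the organizations described here tend to keep out of their reports.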

At this point, most cloud pundits would suggest a technological solution, like stacking containers or something…

Four reasons why virtualization and cloud don’t drive significantly better utilization

Jevons Paradox

Jevons paradox holds that as technology increases the efficiency with which a resource is used, consumption of that resource tends to rise rather than fall. Virtualization and cloud make access to resources (i.e. servers, storage, etc.) easier. The key resource metric to think about here is from the user’s perspective: the number of workloads (not CPU cycles or GB of storage, etc.). This leads to server sprawl, VM sprawl, storage array sprawl, etc., and hurts utilization because the growing number of nodes makes environments more complex to manage.
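A standard textbook way to see Jevons paradox is a constant-elasticity demand model. Assume (my simplification) that the cost of running a workload is proportional to the resources it consumes, and that demand for workloads responds to that cost with price elasticity `e`. Total resource use is then proportional to r^(1 - e), where r is resources per workload, so when demand is elastic (e > 1), making each workload cheaper to run increases total consumption. The function name, the scale constant `k` and the elasticity values below are illustrative only.

```python
def total_resource_use(resource_per_workload: float, elasticity: float, k: float = 1000.0) -> float:
    """Total resources consumed under a constant-elasticity demand curve.

    Assumptions: a workload's cost is proportional to the resources it needs,
    and demand for workloads = k * cost ** (-elasticity).
    """
    if resource_per_workload <= 0:
        raise ValueError("resource_per_workload must be positive")
    workloads = k * resource_per_workload ** (-elasticity)
    return workloads * resource_per_workload


# Virtualization halves the resources each workload needs (1.0 -> 0.5).
before = total_resource_use(1.0, elasticity=1.5)  # 1000.0
after = total_resource_use(0.5, elasticity=1.5)   # about 1414.2: more total use, not less
```

With an inelastic demand (elasticity 0.5), the same efficiency gain cuts total use to about 707, which is the outcome the virtualization pitch implicitly assumes.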

Virtualization vendors and cloud service providers don’t want you to be efficient

Take a look at licensing agreements from virtualization vendors. Whether it’s a persistent or utility license per physical processor, per RAM consumed, per host, per VM or per GB, it doesn’t matter: the less efficient you are, the more money they make. Sure, companies like VMware, Amazon and Microsoft provide capacity management and optimization tools, and they may even make them part of standard bundles, but your account team has a negative incentive for you to use them. Is that why they didn’t help with deploying the tool? And let’s be honest: if better usability reduces revenue, how much investment do you think the vendors are putting into user experience? Cloud is no better. If you leave all your VMs on and leave multiple copies of your data sitting around unused, does Amazon make more or less money? That’s why 3rd party software from vendors like Stratacloud and SolarWinds is important. Beware of capacity management solutions from hardware, virtualization and service providers; chances are they’re bloatware unless there is a financial incentive.

IT organizations don’t reward higher utilization

Okay, maybe this has been acceptable in the past, but that’s changing. In an era of flat or declining IT budgets and migration of IT spending authority to other lines of business, spending valuable resources and time on capacity optimization has been pushed way down the list of priorities. While meeting budget is an important KPI, utilization typically is not. Leadership has also become leery of ROI/TCO analysis, and rightly so, with IT project failure rates resulting in organizations losing an average of US$109 million for every US$1 billion spent on projects. It’s not just about buying a tool to improve efficiency; application architectures and processes also need rework, and all of this creates risk from an IT perspective.

Application architectures and processes need rework

As in the early 20th century example above, better technology-driven efficiency doesn’t necessarily help people achieve their objectives. Without improvements in processes (and organizations), better technology can lead to unintended effects (e.g. virtualization sprawl). This will change over time as organizations acquire new skills, such as building application architectures that take advantage of cloud services, microservices, etc. But the pace of change will still be governed by organizational and process change, not technology change.

Software defined ‘X’

Many have heard about software defined networking (SDN), software defined datacenters (SDDC), network functions virtualization (NFV), and so on. At their core, these technologies are all about automation and ease of deployment. What we’ve found so far is that, for the reasons above, this greater efficiency in provisioning new environments is likely to increase entropy, not decrease it. Only by making the needed changes in an organization’s structure and processes will that complexity be manageable. And this type of change will be much slower in coming than the technology itself.

Do you agree or disagree with any of the points I've made? Let's have that discussion in the comments below.

Why is Uber Investing in Data Centers instead of Running Everything in the Cloud?

You might think that Uber, Airbnb, Pinterest, and all the other unicorns of the cloud native age are all about cloud, and that the notion of building data centers is old school.

Not so fast

Avoiding assets by owning no cars is a key part of Uber’s strategy, so why would Uber invest in data centers?

1. Data is a major source of competitive advantage (when used strategically).

Google understands that; Facebook understands that. What else do they have?

Data centers

Uber’s digital platform collects an incredible amount of data: mapping information, our movements, preferences and connections are just a few of the elements in Uber’s data stores. This data is massive, unique to Uber, and, when combined creatively with other sources of data, becomes a competitive weapon. Uber, like many others, is actively investing in developing additional capabilities, many of them digital; data is the critical piece underlying that strategy.

2. Data Gravity is at work

Data Gravity, a term coined by Dave McCrory (@mccrory), the CTO at Basho Technologies, describes the tendency for applications to migrate toward data as it grows. The idea is that as data grows, it becomes more difficult to move it to the applications that use it, for several reasons, among them network costs, the difficulty of accurately sharding the data, and so on. As time goes on, data sets get bigger and moving them becomes more difficult, leading to switching costs and lock-in.

Because data is such a strategic asset to enterprises with digital strategies, it makes sense that they would build their own infrastructure around it (not the other way around). New digital capabilities in Social, Mobile, Analytics, and Cloud will certainly utilize different service providers, but data will continue to be central.

Amazon was the first to figure this out, but the list of companies utilizing a data-first digital strategy is growing. Most recently, GE announced its Predix cloud service, a clear indication that they intend to launch many specialized cloudy IoT (or Industrial Internet) centric offerings that augment their existing businesses and create customer value and intimacy across both the physical and virtual worlds. As a $140+ billion company that likely spends at least $5 billion on IT, GE has little reason to rely on a service provider, given that its internal IT services are larger than any service provider except AWS.

GE won’t be the only one. In the not-too-distant future, a big chunk of the Fortune 500 will have an internal, multi-tenant public cloud that delivers APIs, platforms, software and other cloudy services tightly aligned with their other offerings. That's basically the definition of a Digital Enterprise.

Most people think Hybrid Cloud is about connecting private clouds to public clouds. I think private clouds will eventually be multi-tenant, meaning more and more companies like Uber and GE (yes I did use them both in the same sentence) will start to look just a little more like Amazon and Google.

Do you agree or disagree? Let's have that discussion in the comments below.

Is Cloud an "Enabler" or "Dis-abler" for Disaster Recovery?

Yesterday in Atlanta I had the honor of moderating a Technology Association of Georgia (TAG) panel discussion on Operational Resilience, and the topic of cloud computing came up. While most people working for cloud providers (I used to work at one) will tell you that Disaster Recovery is a great use case for cloud, our panelists weren't so sure. The feeling in the room was that using a cloud environment in addition to traditional on-premises environments created a bunch of operational complexity, and that it was safer to keep both production and DR in-house.

So which is it? Cloud providers are clearly making money selling DR services, but managing hybrid on-premises/cloud DR is challenging, and you may not get the results you expect.

Are IT managers signing up for cloud-based DR services just to check a box, not knowing if they'll work when they're needed? Perhaps...

The reality is that when done correctly, cloud based DR services can help companies protect their operations and mitigate risk - but it's not easy.

For anyone tasked with developing an IT disaster recovery plan as part of their company’s business continuity plan, the alphabet soup of DR options talked about today by service providers, software vendors, analysts and pundits is truly bewildering. Against this backdrop, analysts like Gartner are predicting dramatic growth in both the consumption and hype of “cloudwashed” DR services.

I agree.

With the lack of standardization, it’s increasingly complex to map DR business requirements to business processes, service requirements and technology. Given this, how do you make sense of it all? For one thing, it’s critically important to separate the information you need from the noise, and the best way to do that is:

Start with the basics of what you’re trying to do – protect your business by protecting critical IT operations, and utilize new technologies only where they make sense. Here are some things to think about as you consider DR in the context of modern, “cloudy” IT.

There is no such thing as DR to the cloud (even though cloud providers claim "DR to the Cloud" solutions).

There’s been a lot made lately about utilizing cloud technology to improve the cost effectiveness of Disaster Recovery solutions. Vendors, analysts, and others use terms like DRaaS, RaaS, DR-to-the-Cloud, etc. to describe various solutions. I’m talking about using cloud as a DR target for traditional environments, not Cloud to Cloud DR (that’s a whole other discussion).

There’s one simple question underlying all this though: If, when there is a disaster, these various protected workloads can run in the cloud, WHY AREN’T they there already?

Getting an application up and running on a cloud is probably more difficult in a DR situation than when there isn’t a disaster. If security, governance, and compliance don’t restrict those applications from running in a cloud during a DR event, they should be considered for running in the cloud today. There are lots of other reasons for not running things in the cloud, but it’s something to consider.

You own your DR plan. Period.

Various software and services out there provide service level agreements for recovery time and recovery point objectives, but that doesn’t mean that if you buy them, you have DR. For example, what exactly does the word recovery mean? Does it mean that a virtual machine is powered up, or that your customers can successfully access your customer support portal? The point is that, except in the case of 100% outsourced IT, only your IT department can ensure that end-to-end customer (or employee, in the case of internal systems) processes will be protected in the event of a disaster. There are lots of folks who can help with BIAs, BCDR planning, hosting, etc. and provide key parts of a DR solution, but at the end of the day, ultimate responsibility for DR lies with the IT department.

Everyone wants DR, but no one wants to pay for it.

I’ve had lots of conversations with and inquiries from customers asking for really aggressive DR service levels, and then when they hear how much it’s going to cost, they back away from their initial requirements pretty quickly. The reality is that as objectives get more aggressive, the cost of DR infrastructure, software, and labor begins to approach the cost of production, and few businesses are able to support that kind of cost. Careful use of techniques like using test/dev environments for DR, global load balancing of active/active workloads, and less aggressive recovery time objectives can drive the cost of DR down to where it should be (about 25% of your production environment’s cost), but be skeptical of any solution that promises both low cost and minimal downtime.

Service providers’ SLA penalties never match the true cost of downtime.

Ok, let’s be honest: unless you’re running an e-commerce site where you can measure the cost of downtime, you probably don’t know the true cost of downtime. Maybe you hired an expensive consultant and he or she told you the cost, but that’s based on an analysis whose outputs are highly sensitive to the inputs (and those inputs are highly subjective).

But that doesn’t mean that service provider SLA penalties don’t matter. Actually, strike that – service provider penalties don’t matter.

A month of services or some other limited penalty in the event of missing a DR SLA won’t compensate for the additional downtime. If it did, then why are you paying for that stringent SLA in the first place? The point here is that only a well thought out and tested DR strategy will protect your business. This leads me to my last point.
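To put rough numbers on this, here's a small Python sketch comparing an estimated cost of downtime with an SLA credit capped at a number of months of fees. The cost model (lost revenue plus staff cost per hour, plus any one-off costs) and every figure in the example are hypothetical, and as noted above the output is only as good as those subjective inputs.

```python
def downtime_cost(hours_down: float, revenue_per_hour: float,
                  staff_cost_per_hour: float, other_cost: float = 0.0) -> float:
    """Rough downtime cost: lost revenue plus idle/recovery labor plus any one-off costs."""
    return hours_down * (revenue_per_hour + staff_cost_per_hour) + other_cost


def uncovered_loss(monthly_fee: float, credit_months: float, cost_of_outage: float) -> float:
    """Portion of the outage cost not covered by an SLA credit capped at credit_months of fees."""
    credit = monthly_fee * credit_months
    return max(cost_of_outage - credit, 0.0)


# Hypothetical example: a 12-hour outage, $50,000/hour of revenue at risk,
# $5,000/hour of staff cost, and a credit of one month of a $20,000/month DR service.
cost = downtime_cost(12, revenue_per_hour=50_000, staff_cost_per_hour=5_000)    # 660,000
gap = uncovered_loss(monthly_fee=20_000, credit_months=1, cost_of_outage=cost)  # 640,000
```

Even if you halve the revenue estimate, the credit covers only a small fraction of the loss, which is the point: the SLA penalty is not your protection, a tested DR plan is.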

You don’t have DR if you don’t regularly test.

A DR solution is not “fire and forget”. To ensure that your DR solution works, I recommend that you test at the user level at least quarterly. DR testing is also a significant part of the overall cost of DR and should be considered when building your business case. I’m sad to say, many of my customers do not test their DR solutions regularly (or at all). The reasons for this are many, but in my opinion, it’s usually because the initiative was driven exclusively by IT technologists, so the supporting business processes and metrics were never put in place. My advice if you implement a DR solution and don’t test it: keep your resume up to date; you’ll need it in the event of a “disaster”.
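One way to keep quarterly tests honest is to score each one against your targets, measuring "recovered" at the user level (when a user can actually complete a transaction) rather than when a VM powers on. Below is a minimal Python sketch; the function name and timestamps are hypothetical, and it assumes the simulated failure happens at a known moment and that you can tell when the last write was replicated to the DR site.

```python
from datetime import datetime, timedelta


def evaluate_dr_test(failure_at: datetime,
                     users_served_again: datetime,
                     last_replicated_write: datetime,
                     rto: timedelta,
                     rpo: timedelta) -> dict:
    """Compare a DR test's achieved recovery time and data loss against RTO/RPO targets.

    users_served_again is when a real user transaction first succeeded at the DR site,
    not when the VMs powered on.
    """
    achieved_rto = users_served_again - failure_at      # how long users were down
    achieved_rpo = failure_at - last_replicated_write   # window of data that was lost
    return {
        "achieved_rto": achieved_rto,
        "achieved_rpo": achieved_rpo,
        "rto_met": achieved_rto <= rto,
        "rpo_met": achieved_rpo <= rpo,
    }
```

For example, a test where the failure is simulated at 9:00, the last replicated write was at 8:45 and users could transact again at 13:30 achieves a 15-minute RPO but a 4.5-hour RTO, so it would fail a 4-hour RTO target even if the VMs were up much earlier.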

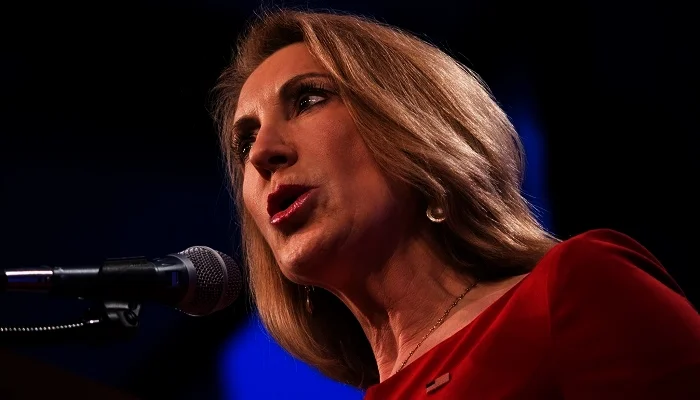

How Carly Fiorina's Mistake Put Me on a Path to Entrepreneurship

Although I was successful with large companies like HP, NetApp and Rackspace, something was always missing. I yearned to create something of my own, and I’ve just taken an even bigger risk: after 12 years of planning, I left Rackspace in January to join the ranks of the entrepreneurs (or the unemployed, as some like to call us).

The aftermath of the 2002 acquisition of Compaq by HP was hard for many of us.

Carly Fiorina's "adopt and go" policy and general economic malaise had resulted in layoffs of entire departments; few groups were left unaffected. My group, the Richardson VLSI Lab, based in Richardson, TX, was no exception.

I remember a "coffeetalk" group meeting where managers lined up at the exit to grab subordinates who were slated for the “workforce reduction” program.

I'll never forget the knot in my stomach while "walking the gauntlet" and the feeling of relief when I passed my manager without incident. In 2002, I had 2-year-old twins and very little appetite for risk.

By the way, did anyone at HP really think re-branding layoffs as “workforce reductions” would soften the blow of being fired?

Kiran Vemuri, my office mate and the engineer directly in front of me in line, was not so lucky. Or maybe he was: after being laid off, he eventually found his way to the Bay Area and later spent 8 years with Apple. Not bad... (He works at a startup now, I think.)

A year later, I received an unexpected opportunity in the form of an email.

As part of Carly’s “adopt and go” policy, she had eliminated the bulk of the pre-merger HP enterprise sales organization, and sales of HP-UX based (UNIX) systems were in free fall. Why did this mistake happen? As a veteran of the telecom sector (AT&T and Lucent), Carly had extensive experience with what was essentially a transactional technology sale. In other words, it was a relatively short sales cycle where price competition dominated and supply chain was the primary buyer. This approach fit well with a Compaq sales organization that was primarily focused on x86 “volume” server sales. It didn’t work well with sales of HP-UX systems, an $8 billion HP business at the time, however. The sales cycle was often more than 6 months, and sales reps had a much more complex sale on their hands. They needed to be able to understand and communicate the value proposition for servers running massive enterprise applications like Oracle and SAP. Buyers were often the technologists themselves, not supply chain. Seen through her transactional lens, the HP legacy sales force looked "lazy".

Because most of the folks who could sell HP-UX servers were gone, Dan Brennan, the VP of HP-UX sales, put together a retraining program to train engineers to become sales people.

I’ll never forget the email: it came from our lab manager on a Sunday night, and we had only until Wednesday to respond. It was like she didn’t want to send it but had to, probably because none of the volunteers would be back-filled and she had deadlines to meet. Volunteers would go through 6 weeks of sales training and then enter the sales force for a 6-month pilot.

I was about to enter the SMU Cox MBA program, and this was a great opportunity to pivot my career. It was risky, though. Layoffs were probably not over, and if the pilot program wasn’t accepted, we were pretty much at the front of the line for layoffs (despite assurances to the contrary).

Out of over 150 engineers in the HP Superdome chipset and microprocessor labs, Scot Heath, Jody Zarrell and I were the only ones to volunteer. (Since then, at least a dozen others have told me they wish they had signed up.) Scot, Jody and I weren’t the only folks in the program, however; there were about 25 of us. Most were from the Tru64 UNIX and Alpha engineering teams on the Compaq side. I guess they saw the writing on the wall, as those programs were all gutted in short order.

It was a wonderful summer: We went through six weeks of training and soon found ourselves back in our respective homes across the US on various sales teams.

About 3 months later, Carly was on a worldwide tour of HP offices to communicate with employees about the new “end to end portfolio” strategy for the combined HP-Compaq. What a load of garbage, but she made it sound really, really good. If you’ve never seen her speak in person, her charisma and presence are simply stunning. I’m not at all surprised to see her in politics.

I decided that this was a great time to flex my new sales muscles in front of the more than 700 HP employees packed into the Richardson hotel conference center. My heart felt like it was pounding out of my chest as I stepped up to the microphone to ask my question.

Carly: Hi, what’s your name?

Me: Hi, I'm Tyler Johnson.

Carly: Oh, great name, my first boyfriend’s name was Tyler.

(Silence. You could have heard a pin drop as her comment threw me off script and I paused.)

Me: I’m sorry but I’m taken.

(All 700+ employees, including Carly, explode with laughter for several seconds until it quiets down enough for me to ask my question)

I can’t for the life of me remember my question…

A few months later, the pilot program changed hands again in a management reorganization, and the sales program was in danger of being cut. I remember holding one of my new twin daughters in a common area of the Scottish Rite hospital in Dallas after her hip exam, waiting for a call from a new manager I barely knew to tell me whether I still had a job.

(Yes, I have 2 sets of twins! And I’m now an Entrepreneur?)

Good news, I still had a job.

I remember being really afraid of losing my income at such a critical time, but it all worked out. The next 10 years saw great success: I graduated with high distinction from SMU with my MBA, and I’ve had a distinguished career with HP, then NetApp and most recently Rackspace (all great companies).

None of this would have happened if Carly hadn’t made a mistake in firing all those HP salespeople and I hadn’t taken a risk.

Although successful with these large companies, something was always missing. I yearned to be able to create something on my own, and I’ve just taken an even bigger risk. After 12 years of planning, I left Rackspace in January to join the ranks of the entrepreneurs (or unemployed as some like to call us).

I’ve already made mistakes: I’ve been through 2 iterations and am in the process of starting a third.

Success consists of going from failure to failure without loss of enthusiasm. – Winston Churchill

My experience has taught me that a combination of taking calculated risks and tenacity will take me to a better place. And that place is probably nowhere near what I imagined it to be. Putting myself out there and being willing to work harder and longer than others is what has and will continue to make all the difference. Even if I’d been laid off along the way, it wouldn’t have mattered. Many or even most of the folks laid off at HP and other places I’ve been ended up in much better situations. I’ve learned that if you focus on improving and working hard, the risk of losing your job is perceived, not real.

Two roads diverged in a wood and I - I took the one less traveled by, and that has made all the difference. – Robert Frost

David Litman, co-founder of Hotels.com, taught me the difference between real and perceived risk in a conservative entrepreneurship course I took from him at SMU back in 2004. Most people overestimate the risk of being an entrepreneur and underestimate the risk of having a “steady” paycheck. Why? One word: fear. And not just fear, but irrational fear.

Are you willing to work harder and longer than your colleagues, engage in careful planning for years only to fail multiple times before you succeed, give up on materialistic expectations for a particular standard of living in the near term (and possibly long term), and trust yourself to do something remarkable?

If so, it’s a race worth running and it’ll take you to a better place.

Thank you to all the people who've helped me on this amazing journey so far.

I’m currently in pre-launch startup mode in the cloud computing space – I’m open to collaboration and/or I’d love to hear your thoughts, ideas and feedback.

For more insights, read my blog at www.contechadvisors.com/blog or follow me on Twitter at @Tyler_J_J

Will GE's Industrial Cloud Flourish Like AWS or Fizzle like New Coke?

The year was 2011.

I was attending Cloud Connect in Santa Clara and having a coffee with Victor Chong, my friend and colleague from the HP days. He had just wrapped up a mobile app startup and was settling into a new job at eBay. While we had a nice time catching up, there was something he said that stuck with me.

One of the main things he learned from his experience in the brutal mobile app space was that it was "all about the data". You can build the best app in the world, but eventually, the owners of the data will find a way to squeeze your profits.

Back to 2015.

Seems obvious now doesn't it? With Facebook, Google, Uber, etc., we see how companies use data to transform industries and gain and/or maintain market dominance. Build a gigantic network of users, use the data you get from those users to dominate - pretty simple right? (Notice I didn't say "easy")

What does this have to do with GE's Predix Cloud Announcement?

Everything.

It's all about user-generated data. You might think that GE is a product company, and you'd be right. But it's also a services company that services millions of industrial devices in the energy, transportation, healthcare, and manufacturing sectors. These devices create literally exabytes of data, which GE currently uses to service and maintain its customers' equipment and gain insights into product design, and which in the future will be used for much more.

With $148+ billion in sales, GE holds a sizable chunk of the industrial IoT (Internet of Things) market captive.

In a stroke of genius, Amazon's Jeff Bezos, Chris Pinkham and others developed a general-purpose technology platform (public cloud) for a specific corner case (an e-commerce bookstore) and then extended that technology to create and transform other markets. In other words, for AWS to exist, amazon.com needed to exist first. And for Amazon the bookstore to be sustainable and reach the scale that would later enable AWS and Amazon as a general-purpose e-commerce site, it had to have millions of customers as a bookstore first.

Build a network of users in a narrow corner case you can dominate, build a general purpose extensible technology platform to service that business, use that platform to dominate other segments. That is what GE is doing - they already have the network of devices, and the platform is there as well.

"GE businesses will begin migrating their software and analytics to the Predix Cloud in Q4 2015" - GE Press Release

There are others using the same strategy:

- Tesla: Started with developing technology to dominate the luxury electric car market - moving into general automotive and energy sectors.

- Uber: Built a multi-sided platform that connected millions of riders and drivers - moving into package delivery.

Can GE compete with cloud heavyweights like Amazon, Google, and Microsoft?

Did I say it's all about the data? I did, but it's also all about the execution. Here are some pros and cons on whether GE can succeed with Predix Cloud.

Cons:

- Cloud incumbents already have a significant head start in operating large-scale public clouds. Things like billing, SLA management, and capacity management are far more complex when you're a cloud operator.

- Incumbents have a massive stable of world class talent and innovation track record - indicators that the pace of innovation isn't likely to slow any time soon.

- Many incumbents have strong leadership with experience leading companies in quickly changing industries.

Pros:

- GE has plenty of talent and experience as an IT service provider. Gartner and others estimate that enterprises spend anywhere from 3% to 6% of revenue on IT. This puts GE's annual IT spend at between $4.5 and $9 billion. They're already a major player in IT, and if you assume that 40% of that spend goes to employees and a fully burdened IT salary is $150k, then they've got between 12 and 24 thousand IT employees.

- They're not starting from zero. As Barb Darrow mentioned in her article on Forbes about the GE announcement, GE invested $105 million in Pivotal over two years ago. The number of Cloud Foundry, big data and cloud experts at GE is not public knowledge, but if they'll invest $105 million in Pivotal, I bet they've invested heavily in talent as well.

- The field is wide open. Public cloud infrastructure, as it exists today, is not yet ready for the scale needed to handle tens of billions of devices. McKinsey, for example, estimates that the market for IoT devices will reach 20-30 billion units by 2020.

- They're the experts in industrial control systems.

- They're not going it alone. GE is developing partnerships with key technology players like Intel and Cisco. Look for more of this type of ecosystem development in the near future.
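The IT headcount estimate in the first "Pros" point is back-of-envelope arithmetic that can be reproduced in a few lines. Here is a minimal Python sketch; the inputs are the assumptions stated in the text ($148 billion in revenue, 3% to 6% of revenue on IT, 40% of IT spend on people, a $150k fully burdened salary), not figures GE has reported, and the helper name it_estimate is hypothetical.

```python
# Back-of-envelope estimate of GE's IT spend and IT headcount.
# All inputs are the article's stated assumptions, not GE-reported figures.
REVENUE = 148e9                 # GE annual sales in dollars ("$148+ billion")
IT_SHARE_RANGE = (0.03, 0.06)   # IT spend as a share of revenue (Gartner and others)
PEOPLE_SHARE = 0.40             # assumed fraction of IT spend that goes to employees
BURDENED_SALARY = 150_000       # assumed fully burdened cost per IT employee, in dollars


def it_estimate(it_share: float) -> tuple[float, float]:
    """Return (annual IT spend in dollars, implied IT headcount) for one IT share."""
    it_spend = REVENUE * it_share
    headcount = it_spend * PEOPLE_SHARE / BURDENED_SALARY
    return it_spend, headcount


if __name__ == "__main__":
    for share in IT_SHARE_RANGE:
        spend, heads = it_estimate(share)
        print(f"{share:.0%} of revenue: ${spend / 1e9:.2f}B IT spend, ~{heads:,.0f} IT employees")
```

Unrounded, the range comes out to about $4.44 to $8.88 billion and roughly 11,840 to 23,680 people, which the text rounds to "$4.5 and $9 billion" and "12 and 24 thousand".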

According to former CEO Jack Welch, GE would not compete in an industry unless it thought it could be first or second.

I don't know if that's still a core tenet of GE leadership, but I'm guessing they think they can lead the emerging Industrial Internet. And I wouldn't bet against them.

Agree? Disagree? I'd love to learn from you.

How to think about Cloud Computing's "Alphabet Soup"

The situation in IT has become ridiculously complex.

ServiceNow, MuleSoft, Stratacloud, New Relic, Splunk, Basho Technologies, RightScale, AppDynamics, SolarWinds, NexDefense and "8000" other companies occupy the increasingly crowded IT tools space. Then add the providers of converged and hyper-converged infrastructure. And don't forget about traditional tech vendors like IBM and VMware.

As mature enterprises look to gain flexibility and customer intimacy while startups look for speed and new business models, a federated model for IT is emerging. Some call it "hybrid" cloud (don't get me started on all the cloud-washing going on), but it's really more than just the infrastructure.

Depending on your situation, you could need applications you manage yourself in your own data centers, applications hosted by others in their data centers under a managed services or SaaS model, or any of potentially thousands of combinations of in-house and third-party delivery.

How does one navigate all this complexity? Established companies, especially those with more than, say, $50 million in revenue, are likely to need multiple platform types for the foreseeable future.

1. Know where you're going. IT strategists need to understand that the future of IT demands a service management model capable of delivering IT across an increasingly complex landscape of delivery models. This federated model for IT service management is not fully possible today, but pieces of it exist; companies need to begin preparing for it, and they will capture significant competitive advantages along the way, which leads to the second point.

2. Minimize rework (or "technical debt"). We can take a page from Jeff Bezos's 2002 mandate to Amazon employees, in which he directed his developers to focus on standard interfaces (APIs) and modularity, among other things. This is what led to the creation of AWS and cloud computing in general. Companies need to adopt a zero-new-technical-debt stance for developing internal applications, and carefully consider the potential for creating technical debt when evaluating tools, architectures, and vendors for new projects. Bezos's list is here:

- "All teams will henceforth expose their data and functionality through service interfaces.

- Teams must communicate with each other through these interfaces.

- There will be no other form of inter-process communication allowed: no direct linking, no direct reads of another team’s data store, no shared-memory model, no back-doors whatsoever. The only communication allowed is via service interface calls over the network.

- It doesn’t matter what technology they use.

- All service interfaces, without exception, must be designed from the ground up to be externalizable. That is to say, the team must plan and design to be able to expose the interface to developers in the outside world. No exceptions."

3. Start with a Hybrid Cloud foundation. While hybrid cloud service management matures, every company will be presented with opportunities to become more digital. In order of operations, start by developing a hybrid cloud delivery capability from both an organizational and an architectural perspective. This is not just tech, although tech is a part of it.

4. Focus on the customer. Business process, application enhancements, and infrastructure enhancements should be prioritized based on improving customer intimacy. This is all about:

- Interacting with customers where they are (social media).

- Empowering your employees by providing them tools to communicate to other employees and customers, access to data they need at the time they need it for insights, and the ability to work effectively wherever they are.

5. Get Help. Very few organizations have all the capabilities required for a digital transformation. Given the complexity and the knowledge required around cloud service management, security, and business process redesign, successful companies will recognize this and build ecosystems of partners and vendors to bring all the needed skills together. Companies that try to do this by hiring alone will usually fail.
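To make the service-interface rule from Bezos's list concrete, here is a minimal Python sketch using only the standard library. It assumes a hypothetical inventory team that owns its data and exposes it over HTTP, and a second team that learns stock levels only by calling that interface over the network. The handler, the /stock/<sku> path, and the function names are illustrative, not Amazon's actual design.

```python
# Sketch of the "service interfaces only" rule: Team A exposes its data over
# HTTP; Team B reads it only through that network call, never from Team A's
# data store. All names here are hypothetical.
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

# Team A's private data store. No other team may import or read this directly.
_INVENTORY = {"book-123": 7, "book-456": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    """Team A's service interface: GET /stock/<sku> returns JSON."""

    def do_GET(self):
        prefix = "/stock/"
        if not self.path.startswith(prefix):
            self.send_error(404)
            return
        sku = self.path[len(prefix):]
        body = json.dumps({"sku": sku, "in_stock": _INVENTORY.get(sku, 0)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the demo


def start_inventory_service() -> HTTPServer:
    """Start Team A's service on an OS-assigned localhost port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def stock_level(base_url: str, sku: str) -> int:
    """Team B's client: the only way it learns stock levels is a network call."""
    with urlopen(f"{base_url}/stock/{sku}", timeout=5) as resp:
        return json.load(resp)["in_stock"]


if __name__ == "__main__":
    server = start_inventory_service()
    host, port = server.server_address
    print(stock_level(f"http://{host}:{port}", "book-123"))
    server.shutdown()
    server.server_close()
```

Because the second team depends only on the URL and the JSON shape, the inventory team can change its storage or language without breaking anyone, which is the spirit of "It doesn't matter what technology they use."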

Agree? Disagree?

Let me know what you think by commenting on this post or reaching out to me directly!

Will your IT strategy turn you into the "Walking Dead"?

Why is CIO turnover down? One word – Digital.

It wasn’t long ago that the joke was: “My 2 year car lease will last longer than the CIO”.

Times have changed, though. With the average CIO tenure approaching 5 1/2 years, he or she can probably pay off a standard car loan on that brand-new Tesla Model S they’ve been eyeing.

Why? CIOs are increasingly involved in business strategy as IT moves from a cost center to an investment center. And strategy takes time. Companies are also now competing with strange new entrants:

“Uber, the world’s largest taxi company, owns no vehicles. Facebook, the world’s most popular media owner, creates no content. Alibaba, the most valuable retailer, has no inventory. And Airbnb, the world’s largest accommodation provider, owns no real estate. Something interesting is happening.”

- Tom Goodwin, TechCrunch

Digital natives are disrupting industries across the globe; this is why outgoing Cisco CEO John Chambers has talked about the digital disruption ahead.

“Forty percent of businesses… will not exist in a meaningful way in 10 years" and “70% of companies would "attempt" to go digital but only 30% of those would succeed.”

- Cisco CEO John Chambers

At either a conscious or subconscious level, most senior leaders understand the need to make technology a more deliberate part of their strategic planning process (hence the growing importance of the CIO role in strategy), but change is difficult, and transformative change is extremely difficult.

“The IT discipline within most enterprises has developed a set of behaviors and beliefs over many years, which are ill-suited to exploiting digital opportunities and responding to digital threats.”

- Gartner

Before anything happens, the executive dialogue needs to change. Most businesses spend between 3 and 5 percent of revenue on IT, so realistic cost cutting in IT can shave off about 1% of revenue at most.

“I went to the CEO and told him I could cut IT costs by 20% by moving to the “cloud”. He responded that a 20% reduction in a cost that was 3% of revenue, or a total margin improvement of 0.6% wasn’t exactly “strategic”. He also said my predecessor tried the cloud and the way it was implemented it actually turned out to be more expensive than before.”

- Newly “Liberated” CIO job seeker
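The CEO's arithmetic in the quote above is easy to check: the margin gain from an IT cut is the IT share of revenue multiplied by the size of the cut. A minimal Python sketch, assuming revenue stays fixed and every dollar saved flows straight to margin (margin_gain is a hypothetical helper name):

```python
# Margin improvement from cutting IT costs, assuming revenue is unchanged and
# all savings flow directly to margin.
def margin_gain(it_share_of_revenue: float, it_cost_cut: float) -> float:
    """Return the margin improvement as a fraction of revenue.

    it_share_of_revenue: IT spend divided by revenue (0.03 means 3%)
    it_cost_cut: fractional reduction in IT spend (0.20 means 20%)
    """
    return it_share_of_revenue * it_cost_cut


if __name__ == "__main__":
    for share in (0.03, 0.05):
        gain = margin_gain(share, 0.20)
        print(f"IT at {share:.0%} of revenue, 20% IT cut: {gain:.1%} of revenue")
```

At 3% of revenue a 20% cut is worth 0.6% of revenue, and even at 5% it is only 1%, which is why cost savings alone rarely make the strategic case.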

To be relevant to the business, CIOs have to talk about more than cost and uptime – they need to be able to build momentum by educating their business counterparts on how these emerging technologies can transform a company from a business perspective.

Once the leadership team is on board, cloud, big data, social and mobile all have to be part of a cohesive technology and business strategy for transforming the business over time. This will take time and investment, and if companies wait until they’re being disrupted by newcomers, it’s already too late.

The CIO plays a pivotal role in all of this, which is to bridge the business and technology strategy chasm.

A great first step toward digital transformation for a CIO is to develop a hybrid cloud strategy that enables both migration and maintenance of legacy applications and incorporation of new technologies like big data analytics, data visualization, social data integration, etc.

Design your hybrid cloud foundation to minimize technical debt (rework)

15 years ago it was virtualization, then it was cloud, and now we’re seeing new container technologies like Docker emerge, all layers of abstraction intended to increase utilization and improve flexibility. Even with all these technologies, IT infrastructure in general is still dramatically underutilized compared to other types of capital equipment.

It always amazes me to see companies still managing IT assets by spreadsheet, even virtual ones.

Develop a service management strategy that is designed for hybrid cloud architectures and is easy to deploy and manage. Key attributes are security integration, third-party vendor interoperability, reference architectures, extensibility with standard APIs, intelligent automation of standard management tasks like provisioning and de-provisioning of virtual and physical resources, role-based access management, capacity management, SLA management and monitoring.

Once a solid foundation is in place, target applications with the best ROI in terms of improving the company’s business. These are typically applications that are customer-facing, that contain data which becomes more valuable when combined with other data and platforms (e.g., social, mobile), and/or that could be most easily ported to hybrid architectures.

CIOs also need to gradually transform their organizations from a capabilities perspective, moving from traditional metrics like application uptime, response time, and Capex-based measures of infrastructure consumption to business-outcome-based metrics. The move from traditional on-premises to hybrid is a good time to implement tools that can not only accurately measure infrastructure usage in both on- and off-premises environments, tied to applications, but can also be easily integrated into other components of a digital enterprise platform. For example, you could use Flume or Sqoop (depending on the data source) to bring infrastructure cost and performance data into a Hadoop cluster, where analytics can tie these costs to social media activity (tweets about your product, for example). Imagine correlating user interface design changes with Facebook likes: very powerful.
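Once cost data and social data land in the same analytics store, the correlation step itself is simple. The Python sketch below assumes both feeds have already been reduced to per-day values keyed by ISO date strings (an assumption; a real Flume or Sqoop pipeline would produce whatever schema you design), joins them on date, and computes a Pearson correlation coefficient. The function names and the sample numbers are hypothetical placeholders, not real measurements.

```python
# Join two per-day series on date and compute their Pearson correlation.
# Record layout (ISO date string -> value) is an assumption for illustration.
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (sx * sy)


def join_by_date(costs: dict[str, float], mentions: dict[str, int]) -> tuple[list[float], list[float]]:
    """Inner-join two per-day series on their date keys, in date order."""
    days = sorted(costs.keys() & mentions.keys())  # ISO dates sort chronologically
    return [costs[d] for d in days], [float(mentions[d]) for d in days]


if __name__ == "__main__":
    # Hypothetical placeholder data: daily infrastructure cost and product tweets.
    daily_cost = {"2015-06-01": 1200.0, "2015-06-02": 1350.0,
                  "2015-06-03": 1500.0, "2015-06-04": 1400.0}
    daily_tweets = {"2015-06-01": 40, "2015-06-02": 55,
                    "2015-06-03": 71, "2015-06-05": 90}
    xs, ys = join_by_date(daily_cost, daily_tweets)
    print(f"matched days: {len(xs)}, correlation: {pearson(xs, ys):.3f}")
```

Only dates present in both feeds are compared, and a strong coefficient is a prompt for further investigation, not proof that one series drives the other.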

Change is hard: it will take organizations intense effort over a span of years to become truly digital. All functions, from IT to HR, finance, marketing, sales, R&D, and operations, will have to work together to transform their organizations and business processes in addition to technology. More than ever, the CIO must provide business leadership in addition to technical leadership.